Last week, the word "petaflop" came up several times during NVIDIA founder and CEO Jensen Huang's GTC keynote. According to Gemini, a petaflop is "a unit of measurement for a computer's processing speed. It stands for quadrillion (one thousand trillion, written as 1,000,000,000,000,000) floating-point operations per second." Huang used the term while introducing NVIDIA's new Blackwell GPU (Graphics Processing Unit), which offers up to 20 petaflops of processing power. NVIDIA's architecture allows these chips to be deployed by the tens of thousands.
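
A quick back-of-the-envelope calculation helps put these figures in perspective. The sketch below assumes a hypothetical cluster of 10,000 GPUs, each running at the quoted 20-petaflop peak with perfect scaling. Real deployments rarely sustain peak throughput, and the function name `cluster_flops` is illustrative only.

```python
PETAFLOP = 10**15  # one quadrillion floating-point operations per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def cluster_flops(num_gpus: int, petaflops_per_gpu: float) -> float:
    """Peak aggregate FLOPS, assuming every GPU runs at peak (hypothetical)."""
    return float(num_gpus * petaflops_per_gpu * PETAFLOP)

# Assumed cluster size: 10,000 GPUs at 20 petaflops each.
total = cluster_flops(10_000, 20)
print(f"{total:.1e} FLOPS")  # 2.0e+20 FLOPS

# A person doing one calculation per second would need this many years
# to match a single second of the cluster's peak output.
years = total / SECONDS_PER_YEAR
print(f"{years:.1e} years")
```

Under these assumptions, the result is on the order of six trillion years of hand calculation for one second of machine time.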

Numbers at this scale are beyond what most of us can intuitively grasp, but consider this: these super-processors can dramatically accelerate the training of Large Language Models (LLMs) and enable generative AI applications so much better that today's tools will look infantile by comparison. What does "exponentially better" mean, exactly? Speed, availability, and security will be table stakes, and improvements in these areas will likely be identified and monitored by AI itself.

The human ingenuity and engineering that produced this hardware also lay the foundation for AI that can improve itself. The new generation of AI will be both coach and athlete, teacher and student. But that's only part of the picture. It's all well and good for AI to know how to use AI. What about the billions of people who will interact with AI through a variety of interfaces: web, mobile, wearables, augmented reality, and the cars of the future?

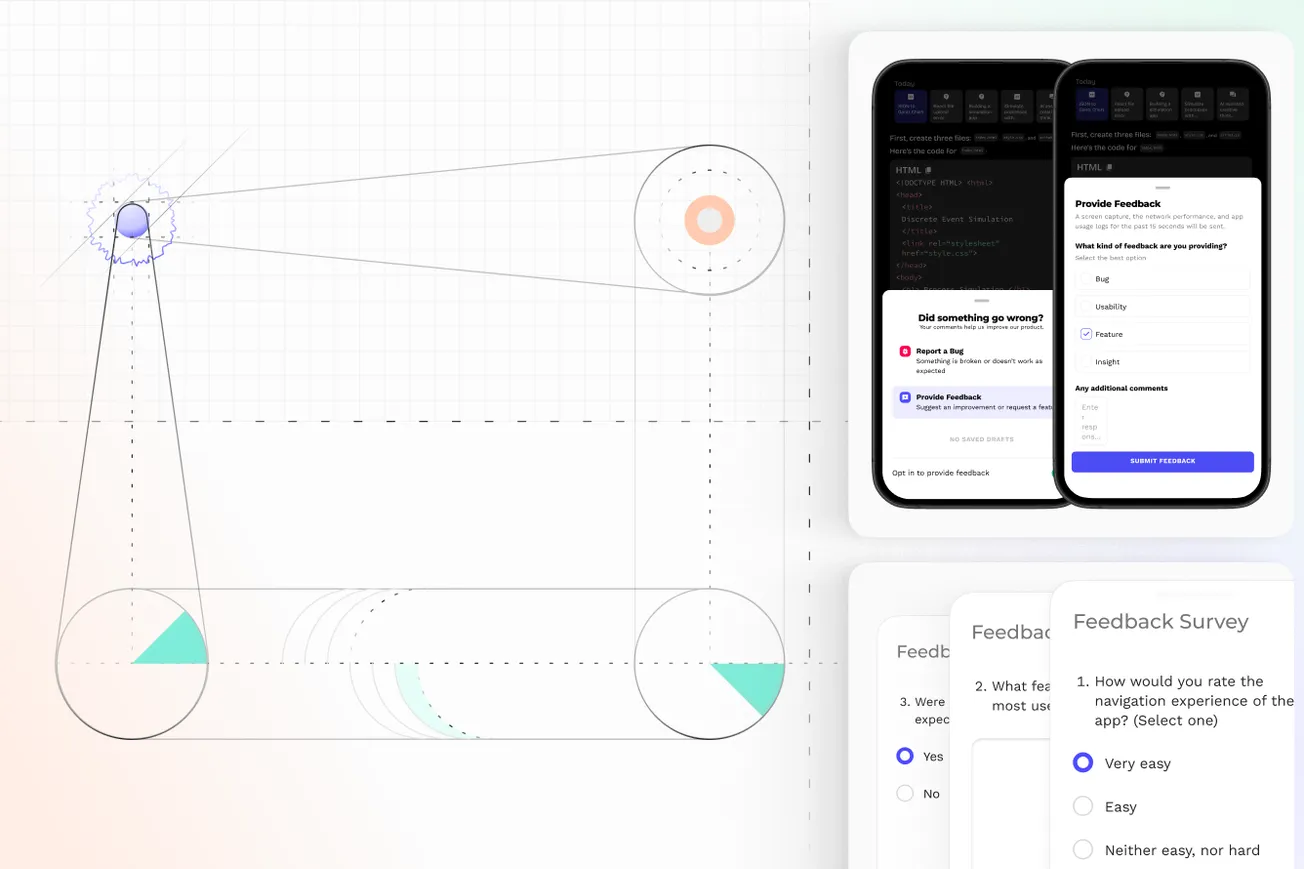

Issues of factual accuracy, bias, and trust will increasingly require human-led UX research, design, and testing. The people designing these systems and studies will need a deep understanding of the intersection of AI and UX, expertise that simply didn't exist a few years ago. The work we do at Pulse Labs bridges this gap. Our tools and processes were designed specifically for UX research, to help evaluate how people experience artificial intelligence.

We work with some of the most innovative AI companies in the world, and our platform is uniquely positioned to collect and categorize observational and naturalistic data about generative AI products. If you are a researcher working in AI and UX, we want to hear from you and share knowledge with you. If you're a company building AI products on LLMs, reach out and ask about our thinking on generative AI UX research.