In the last article, ‘The Complete Picture: Solving the Puzzle of Research Fragmentation’, we established that alignment provides the ‘complete picture’ — the shared framework that brings all your research pieces into focus. But even with a clear picture, a persistent challenge remains: getting the pieces to move and talk to each other.

Research shows enterprise teams often operate across five or more platforms, spending significant time transferring data and coordinating stakeholders. These inefficiencies don't just slow projects — they risk the integrity and quality of insights.

The cost of these fragmented systems is clear: 82% of enterprises report that data silos disrupt critical workflows, and 68% of enterprise data remains unanalyzed. This is where data‑led integration redefines how organizations approach research.

The Problem: Research Silos Are Data Dams

Alignment solves ‘who sees the data and how,’ but integration solves ‘where the data lives and how it flows.’

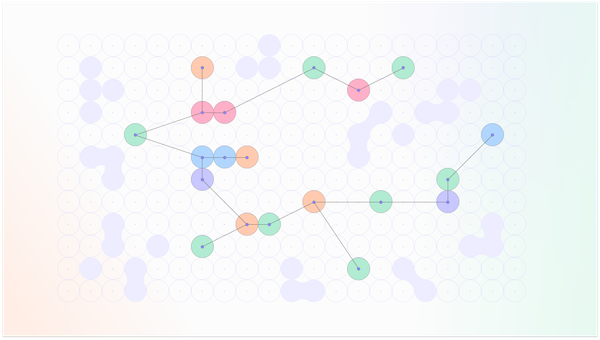

Imagine a study where participant screening happens in Tool A, interviews are recorded in Tool B, and analysis occurs in Tool C. Each manual transfer — download, upload, or handoff — introduces risk: data can be lost, corrupted, or misinterpreted. Projects stall as researchers wait on IT or other manual intermediaries to manage cumbersome exports, while pulling sensitive PII out of governed systems creates compliance vulnerabilities.

When data is trapped in individual tools across multiple platforms, your research ecosystem becomes a series of data dams, blocking the flow of insight. According to TDWI, many organizations struggle to achieve timely information delivery because of outdated integration tools, which slows decision-making. Researchers spend less time analyzing and more time on administrative coordination. Insights grow stale by the time they are aggregated, and teams lose trust in the integrity of their own data.

Even when data exists across platforms, differences in schema, definitions, or governance increase the risk of misinterpretation or duplication. Legacy tools like spreadsheets compound these risks: organizations that rely on fragmented, manual processes face significantly higher project failure rates.
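To make the schema problem concrete, here is a minimal sketch of how the same participant can look like two different people when two tools name and format fields differently. The field names (`pid`, `participantId`, `contact_email`) and the helper functions are hypothetical, chosen only for illustration; real tools will have their own export formats.

```python
def normalize_screener(row):
    """Map a row from a hypothetical screening tool onto a shared schema."""
    return {
        "participant_id": row["pid"].strip().upper(),
        "email": row["Email"].strip().lower(),
    }


def normalize_interview(row):
    """Map a row from a hypothetical interview tool onto the same schema."""
    return {
        "participant_id": row["participantId"].strip().upper(),
        "email": row["contact_email"].strip().lower(),
    }


def merge_participants(records):
    """Keep one record per participant and flag conflicting emails."""
    merged = {}
    for record in records:
        existing = merged.setdefault(record["participant_id"], dict(record))
        if existing["email"] != record["email"]:
            raise ValueError(f"Conflicting email for {record['participant_id']}")
    return list(merged.values())


# Illustrative exports: the same person appears in both tools with
# different field names, casing, and stray whitespace.
screener_rows = [{"pid": " p-101 ", "Email": "Ana@Example.com"}]
interview_rows = [
    {"participantId": "P-101", "contact_email": "ana@example.com"},
    {"participantId": "P-202", "contact_email": "lee@example.com"},
]

records = [normalize_screener(r) for r in screener_rows]
records += [normalize_interview(r) for r in interview_rows]
participants = merge_participants(records)
```

Without the normalization step, a naive comparison would treat `" p-101 "` and `"P-101"` as separate participants, which is exactly the kind of duplication that manual transfers tend to introduce.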

The Solution: Integration as a Seamless Pipeline

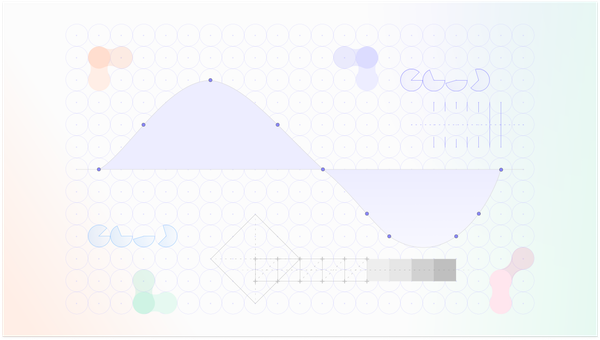

Data‑led integration transforms disconnected tools into a high‑velocity research pipeline, where every piece of data, from a single participant response to a large-scale survey, flows automatically and securely to where it’s needed.

Three pillars make this possible:

- Deep, Two‑Way Connections: Native integrations allow data to flow seamlessly in both directions. For example, participant availability from a CRM can automatically update recruitment status in real time. This reduces manual coordination and ensures teams always operate with the most up-to-date information. It also supports flexible execution models, whether teams run research entirely themselves or collaborate across internal departments, without introducing dependency on manual data handling.

- Centralized Governance & Security: Data moves within a protected, governed environment. Security and compliance tagging travel with the data, reducing the risk of PII exposure. IBM notes that ‘policies that move with the data’ are critical for modern enterprises seeking agility while maintaining trust. A governed, consistent environment ensures that all contributors operate with accurate data, regardless of how teams structure their workflows.

- Automated Workflow Triggers: Integration goes beyond movement: it triggers action. Completed interviews can automatically flow into transcription, categorization, and analysis, replacing slow human handoffs with reliable automation. Self-service integration empowers teams to access and prepare data without heavy IT involvement, cutting time-to-insight significantly. This automation supports self-serve research, enabling teams to manage study cycles independently and at speed.
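As a rough illustration of the second and third pillars, the sketch below shows a completed interview triggering transcription, categorization, and a redaction step, with a governance tag that stays attached to the record throughout. Everything here (`Record`, `Pipeline`, the `interview.completed` event name, and the stand-in step functions) is hypothetical and not tied to any specific product; a real pipeline would call actual transcription and analysis services.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """A piece of research data plus the governance tags that travel with it."""
    payload: dict
    tags: frozenset = frozenset()


class Pipeline:
    """Tiny event dispatcher: handlers run in registration order."""

    def __init__(self):
        self._handlers = {}
        self.log = []

    def on(self, event, handler):
        self._handlers.setdefault(event, []).append(handler)

    def emit(self, event, record):
        for handler in self._handlers.get(event, []):
            record = handler(record)
            self.log.append(handler.__name__)
        return record


def transcribe(record):
    # Stand-in for a real transcription service (assumption).
    text = f"transcript of {record.payload['recording']}"
    return Record({**record.payload, "transcript": text}, record.tags)


def categorize(record):
    # Stand-in for real theme detection (assumption).
    theme = "pricing" if "pricing" in record.payload["transcript"] else "general"
    return Record({**record.payload, "theme": theme}, record.tags)


def redact_for_analysis(record):
    # The tag, not the person handling the data, decides what gets stripped.
    if "pii" in record.tags:
        payload = {k: v for k, v in record.payload.items()
                   if k != "participant_email"}
        return Record(payload, record.tags)
    return record


pipeline = Pipeline()
pipeline.on("interview.completed", transcribe)
pipeline.on("interview.completed", categorize)
pipeline.on("interview.completed", redact_for_analysis)

interview = Record(
    {"recording": "pricing-call-07.mp4", "participant_email": "p@example.com"},
    frozenset({"pii"}),
)
result = pipeline.emit("interview.completed", interview)
```

The design choice worth noting is that each step returns a new record carrying the same tags, so the `pii` marker cannot be dropped by accident as the data moves from one stage to the next.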

By embedding integration logic early and designing native two-way connectivity, organizations can reduce errors, accelerate cycles, and scale research efficiently. This is particularly important in fast-moving markets where delayed insight can compromise decision-making. Integrated systems give teams the agility to execute research in whichever model they choose — fully internal, hybrid, or distributed — without sacrificing data quality or velocity.

Redefining Research: Accuracy, Efficiency, and Trust

Prioritizing deep integration delivers three key benefits:

- Accuracy: Fewer manual transfers mean lower risk of data corruption or misinterpretation. Stakeholders can trust that insights reflect reality. Consistency is maintained regardless of how teams allocate responsibilities across their organization.

- Efficiency: Automated workflows and real-time pipelines shorten research cycles. Projects can move from planning to insight in days, not weeks. Teams can operate independently at scale, without bottlenecks introduced by manual dependencies.

- Trust: When data flows reliably and is governed automatically, stakeholders from researchers to executives gain confidence in decision-making. Unified, governed data builds trust across all contributors involved in the research lifecycle.

Integration turns alignment from a strategic vision into daily operational reality, powering the connected research ecosystem and moving organizations from slow, siloed reactions to fast, unified decisions.

Next in the Series: Integration’s Final Mile

We’ve defined the problem, quantified the cost, created the blueprint, established alignment, and engineered data flow through integration.

The final article will explore the last mile of the Connected Research Ecosystem, Action: turning insights into measurable, continuous change across the organization.

Stay tuned for ‘The Last Mile – Creating Actionable Insight’.
