In the rapidly evolving field of artificial intelligence (AI), the push for smarter, more adaptable systems is a top priority for some of the world’s largest technology companies. However, as we strive for progress, we’re encountering a unique challenge: the risk of "inbreeding" within AI models. This term, borrowed from genetics, refers to the scenario where AI systems are trained and retrained on a narrow set of data or methodologies, leading to a lack of diversity in knowledge and capabilities. This inbreeding can stifle innovation, reinforce biases, and limit how broadly AI can be applied in the real world.

AI inbreeding manifests primarily through data homogeneity, where models become over-reliant on specific types and sources of data and lose their ability to generalize and perform accurately in varied, real-world situations. Similarly, methodological inbreeding, the repeated use of a narrow set of techniques, can curb the development of robust and versatile AI systems. This challenge is akin to an echo chamber, where feedback loops reinforce the same ideas without introducing new perspectives, leading to less innovative and adaptable AI models.
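One rough way to make data homogeneity concrete is to look at how evenly a training set draws on its sources. The sketch below is only an illustration, not a description of any particular team's tooling: the function name `source_entropy_bits` and the source labels are hypothetical, and Shannon entropy is just one of many possible diversity measures.

```python
import math
from collections import Counter


def source_entropy_bits(sources):
    """Shannon entropy (in bits) of the source labels in a dataset.

    0.0 means every example comes from one source; higher values mean
    the examples are spread more evenly across more sources.
    """
    counts = Counter(sources)
    total = sum(counts.values())
    if len(counts) < 2:
        # Empty dataset or a single source: no diversity to measure.
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical labels: where each training example came from.
homogeneous = ["model_output"] * 9 + ["human_panel"]
balanced = (
    ["crowdsourced"] * 5
    + ["user_content"] * 5
    + ["human_panel"] * 5
    + ["model_output"] * 5
)

print(source_entropy_bits(homogeneous))  # well below 1 bit
print(source_entropy_bits(balanced))     # 2 bits for four equally used sources
```

A low score does not prove a model is "inbred", and a high score says nothing about the quality of each source; it is simply a quick signal that a dataset leans heavily on one kind of input.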

The antidote to AI's inbreeding problem lies in the very thing AI seeks to emulate: human intelligence and behavior. Human users offer an unparalleled source of diverse, complex, and naturalistic data, reflecting the real world's vast array of contexts, languages, and interactions. By leveraging human-generated data, we can introduce a rich tapestry of experiences and perspectives into AI training processes, so that models better reflect the multifaceted nature of human existence.

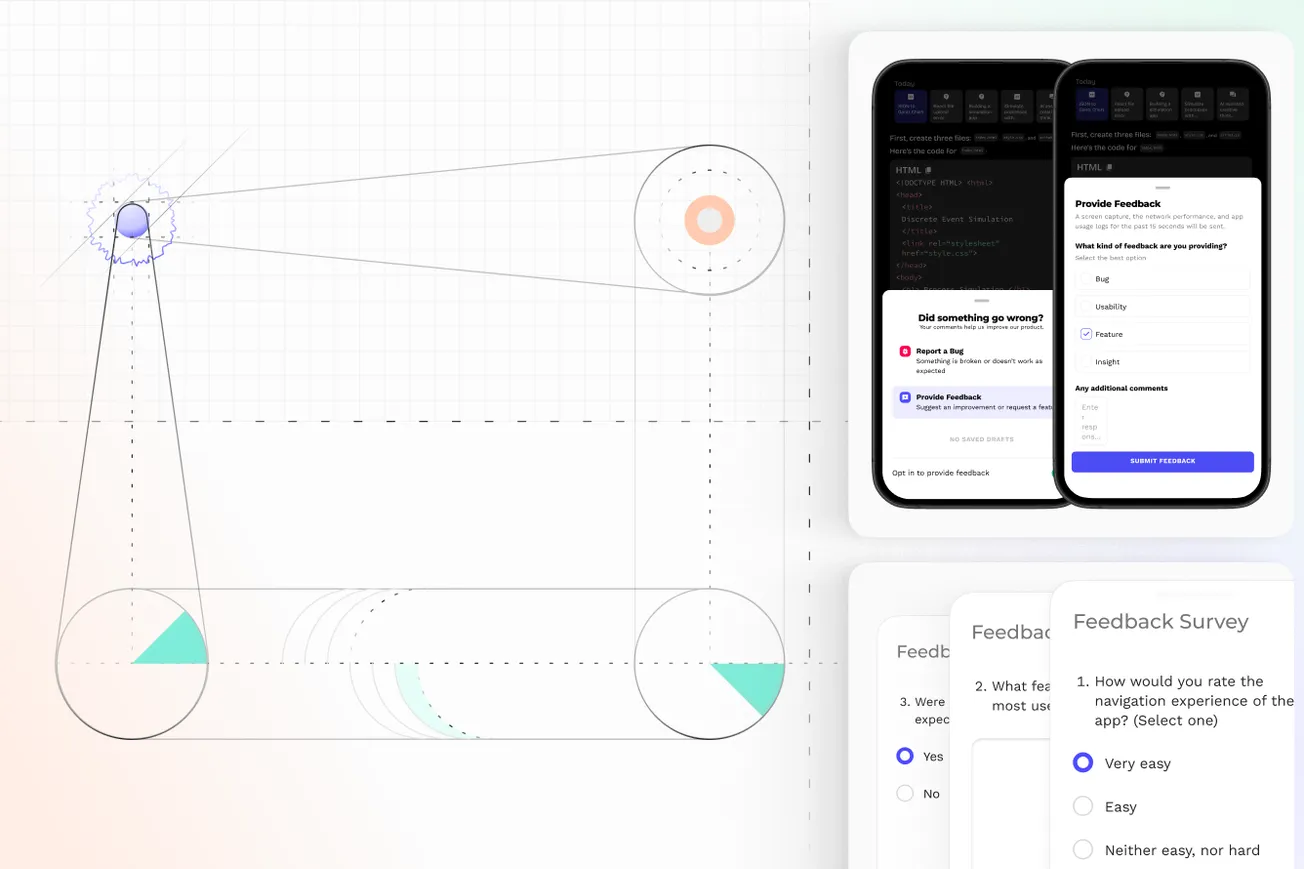

The good news is that there are several strategies available for human-centric data collection, many of which we are employing at Pulse Labs for some of the world’s biggest technology companies. These strategies include:

- Crowdsourcing: Utilizing platforms to gather a wide array of human insights, experiences, and judgments can significantly diversify training datasets.

- User-Generated Content: Tapping into the wealth of data produced by users offers real-world language and interaction patterns.

- Participatory Design: Directly involving users in the AI development process ensures the data and models align with genuine human needs and contexts.

- Interactive Learning: Systems that learn from real-time user interactions adapt continuously, benefiting from the natural language and feedback of human users.

- Gamification: Making data collection engaging encourages participation and creativity, enriching the dataset with high-quality, diverse inputs.

While human-centric data collection offers an effective solution to the inbreeding challenge, it's imperative to navigate this process ethically. Respecting user privacy, ensuring consent, and promoting data diversity are non-negotiable principles that underpin the integrity of this approach. These are core values we embrace as part of Pulse Voices, our global network of panelists.

The challenge of AI inbreeding is significant, but the solution is within our reach. By embracing the complexity, diversity, and richness of human-generated data, we can develop AI models that are not only more robust and innovative but also more aligned with the nuanced realities of the world they're designed to serve. The path forward for us at Pulse Labs is clear: infuse AI with the essence of human experience, and watch as it evolves into a more adaptable, insightful, and genuinely intelligent entity.
