Hallucinations (the industry term for AI-generated responses that are inaccurate or nonsensical but presented as fact) are an area where AI platforms and the public may not see things the same way.

- Only 5% of rated user interactions are seen as containing hallucinations; the remaining 95% of responses are rated as accurate.

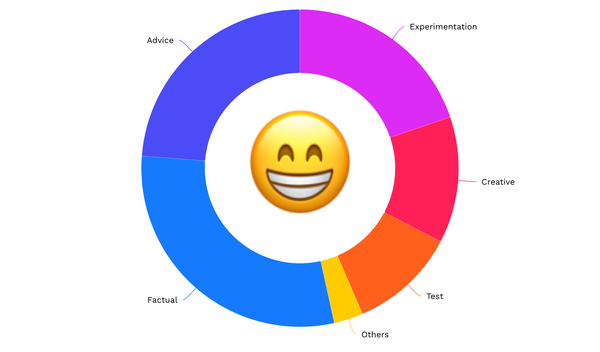

That said, hallucination rates more than double for “experimentation” and “creative” prompts:

- Experimentation: prompts meant to learn what the AI can do. E.g., “tell me what my meal plan should be if I want to grow muscle.”

- Creative: prompts meant to create something new or unique. E.g., “write me a song about fishing in the style of Michael Jackson.”

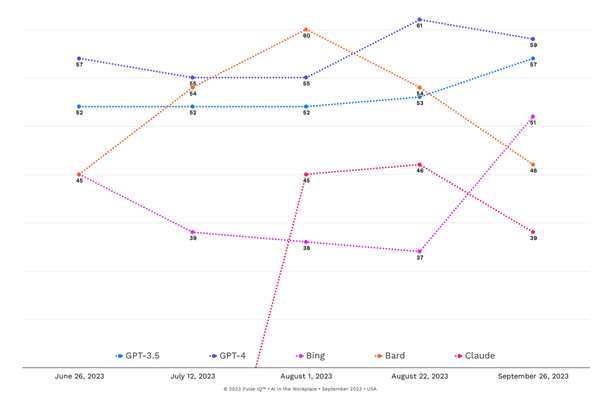

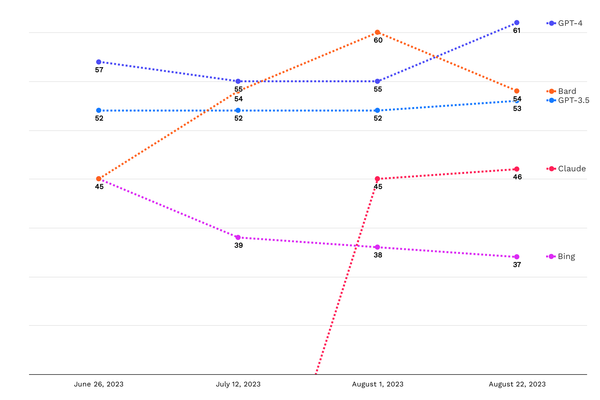

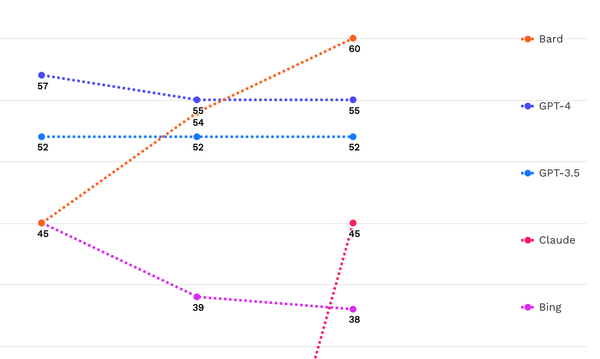

- Comparing platforms:

  - 17% of users flag GPT-4 responses to “creative” prompts as possible hallucinations.

  - Bard has the fewest hallucination reports for “creative” prompts.

Implication

Gaps in a platform’s ability to manage hallucinations are likely to draw more attention in three areas:

1) Topics the consumer knows intimately (a plumber is more likely to spot a hallucination in a response about plumbing than in one about aerospace).

2) Topics where higher accuracy is expected, such as health, finance, and law.

3) Topics often targeted by misinformation campaigns, especially if that misinformation is creeping into training data and, from there, into responses.

Lastly, another factor affecting hallucinations is the recency gap (i.e., new news), because training data has a cutoff date. For example, GPT-4 was trained on data from before October 2021, so anything after that date would not be reflected in its responses at this time.
