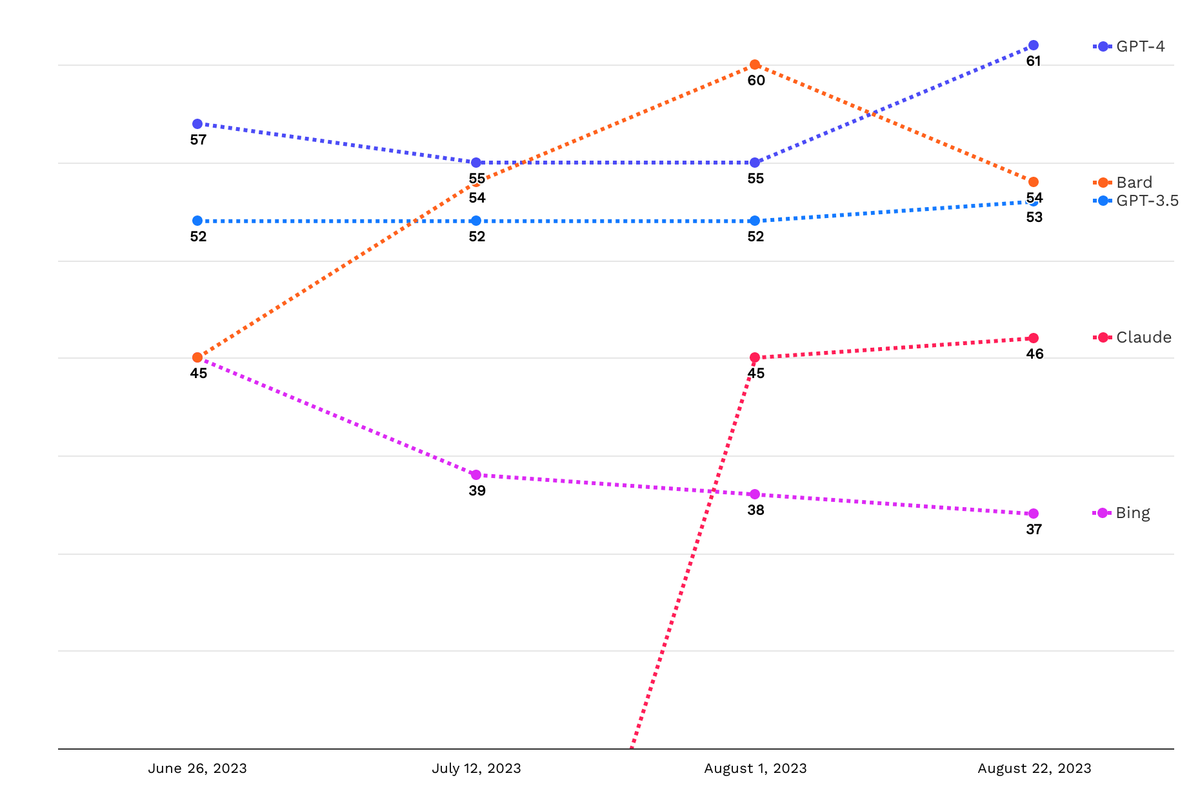

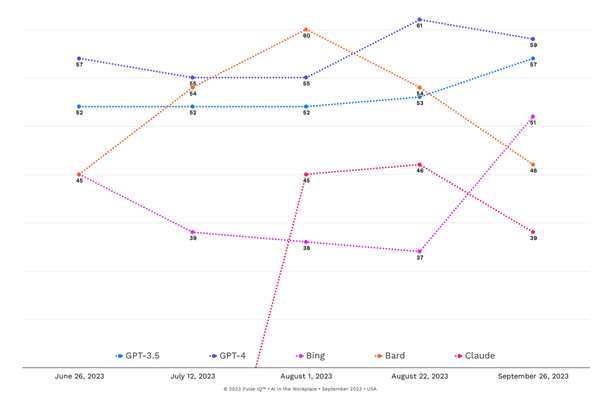

Bard and GPT-3.5 are close behind

As part of our Pulse IQ research, we have users compare prompts and responses from different AI platforms side by side. In the latest round of comparison, 61% of users preferred responses from GPT-4.

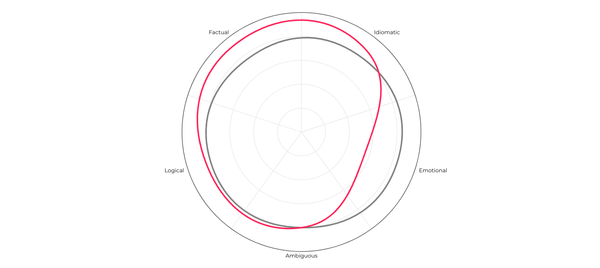

Overall

Users picked GPT-4 because they perceived the responses to be well thought out, transparent about the AI's limitations, and overall easy to read. People found the responses easier to comprehend because of the clear way information was presented.

I appreciate the use of buffering language at the beginning to soften the authority of the information.

—Millennial

It came down to the bottom (GPT-4) one being more specific. It mentioned Khan Academy for practice problem help, which is a great and helpful suggestion. The top one also suggested practice problems, but mentioned no specific sites or examples.

—Millennial

I prefer the layout and structure of this response. It clearly identifies the goal and then gives multiple "action" bullet points below to help achieve that goal. This is well done and lays out like a checklist.

—Gen X

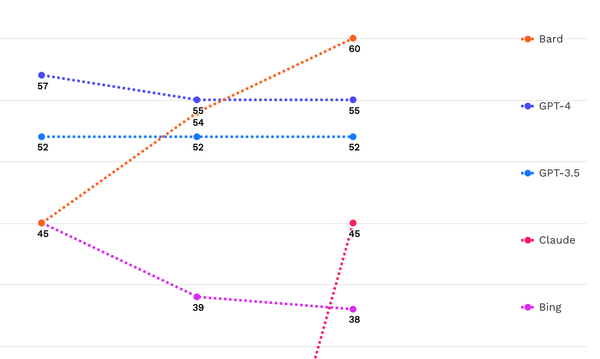

GPT-3.5 vs GPT-4: GPT-4 narrowly wins

When users compared GPT-3.5 and GPT-4, it was a close contest: users preferred GPT-4 53% of the time, versus 47% for GPT-3.5. Users found GPT-4's responses to be better structured and easier to read.

GPT-4 has better paragraph structure. It organizes its ideas into two parts, professional and personal. GPT-3.5 seems to combine the two which made it harder for me to pick out personal and professional.

—Male, Gen X

Platform one was able to recall my previous conversation.

—Male, Millennial

GPT-3.5 felt like it had some irrelevant information while GPT-4 had some of the same info, but it was organized in a way that made it easier to skim through and had a good intro so I had a general idea, then it got more in depth which I liked.

—Male, Gen Z

Bard vs GPT-3.5: Bard edges ahead

When Bard is shown next to GPT-3.5, Bard is preferred 52% of the time and GPT-3.5 is preferred 48% of the time. Users appreciated Bard’s ability to present information clearly without contributing to cognitive overload.

The formatting was better. I have ADHD and it's harder for me to read a big block of text that isn't separated into different sections.

—Male, Millennial

I like how the Bard cited a source it got the information from, which allows the user to dig deeper into the topic and make their own judgements.

—Male, Gen Z

I liked the length of Bard's response better and it just felt like it answered the question more succinctly.

—Nonbinary, Millennial

Implications

- Newcomer Claude is holding steady compared with our last benchmark, released on August 1. This platform is one to watch as more Pulse IQ data comes in, and as the platform's model learns from higher usage.

- Users continue to cite readability and formatting as strong drivers for picking responses. Succinct, well-formatted content and bulleted lists help users process information easily, especially for unfamiliar topics.
