Users are wary of proprietary data in AI's hands

The increasing use of AI has raised numerous concerns about the privacy of personal data among both businesses and individuals. AI systems depend on vast volumes of data to learn and make predictions, which prompts concerns about how the sensitive information that individuals or companies provide is gathered, handled, and safeguarded. Users do seek a more tailored experience from AI, and often find themselves sharing a lot of personally identifying information in their queries, as we indicated in our earlier Pulse IQ™ Report, “People Willing To Give Data to AI Platforms for Personalization.”

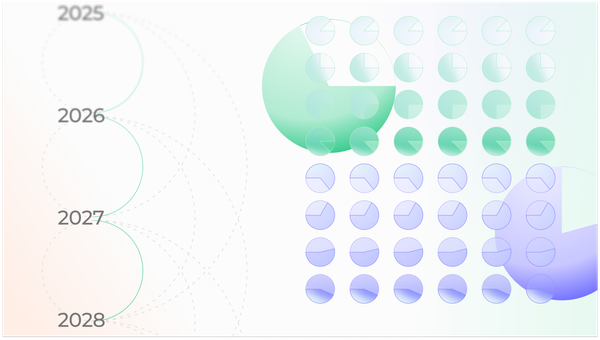

However, in the context of work, privacy concerns get more complicated given the proprietary nature of the information AI users have access to. In our recent work-focused Pulse IQ research, we asked participants, “How concerned are you about the possibility of putting proprietary information or customer data (for example, personally identifiable information) into an AI tool?” A majority (62%) of users expressed moderate to high concern, underscoring the importance of data security and privacy in professional contexts, especially for those in finance, legal, HR, or other roles where they may have access to highly confidential business or customer information.

Despite the concerns users express over possible risks around putting sensitive business or customer information into an AI tool, when asked "Have you received training from your employer about security and privacy when using AI?" only 14% of study participants said yes. This indicates that workers are largely being left to their own judgment about how and when to use AI safely at work.

One of the primary privacy apprehensions linked to AI revolves around the possibility of data breaches and unauthorized access to personal information. Given the vast amounts of data AI systems collect and process, users perceive a significant risk that this data could end up in the wrong hands, either due to hacking incidents or other security breaches.

“The data can be stolen by hackers and the data could be used to commit fraud or identity theft.”

—Roody, Gen X, GPT-3.5

“I'm very cautious online with personal info already. So I'm most certainly not in a comfortable place to give AI sensitive info. I work for a vulnerable population and I would never put them more at risk.”

—Nichole, Gen X, Bing

“You never know who's at the other end of the AI, managing it, for example Bard clearly states that your conversations will be reviewed.”

—Bulinda, Gen X, Bard

“AIs are owned by big tech, and we know they love to sell data.”

—Leighann, Millennial, GPT-3.5

There is still much that users don’t know or understand about AI, a gap heightened by the fact that AI platforms continue to develop and evolve. Media discourse and popular-culture depictions of dystopian futures add to users' unease.

“We have all watched AI movies, and to be honest, having personal information given to AI is a risk. You never know when someone hacks in and takes everything out!”

—Prashant, Gen Z, Bard

“AI is so new and I think anything could happen since it's so new. I am concerned but not very much because from what I have seen I am very impressed and I think the chances of some kind of breach of data would not be an issue.”

—Larry, Gen X, GPT-3.5

“Some AI such as Bard are still in development, and as such, conversations are being manually reviewed by the developers.”

—Marie, Gen Z, Bard

Implications

AI use at work is on the rise, workers are wary, and corporate training lags behind actual use.

- AI users are at least somewhat aware that there are risks associated with sharing confidential business information when using AI for work. They also seem to be more concerned about potentially exposing sensitive business or customer data than they are about sharing their own private details.

- Users across many workplaces and industries participated in this study, from hospitals to home improvement businesses and from software developers to finance professionals. People from all backgrounds, even those who aren't usually thought of as "technical," are embracing AI technology. This means that businesses of all sizes and in all industries should look to understand how their employees may use AI and train them accordingly.

- Many businesses are not providing any training on how to use AI securely for work-related tasks. Combined with the rising number of people using AI at work, this magnifies corporate risks. AI is a useful tool for many work tasks, so businesses looking to be competitive and innovative should strike a balance between staying security-aware and preserving workers' access to AI.
