Hallucinations (an industry term for cases where an AI platform generates responses that are inaccurate or nonsensical but presents them as factual) are an area where platforms and the public may not see things the same way.

Latest

The Human Factor In Artificial Intelligence

Artificial intelligence is eating the world. The pace at which machines have recently been able to achieve human or even superhuman performance on tasks previously requiring human intelligence has been breathtaking. AI has the potential to become a profoundly useful assistant and tool in almost every aspect of human life,

The Intersection of UX and AI: Implications of LLMs and Generative AI in UX Design

Key webinar takeaways from a discussion around the intersection of UX and AI. Explore the implications of LLMs and generative AI in UX design.

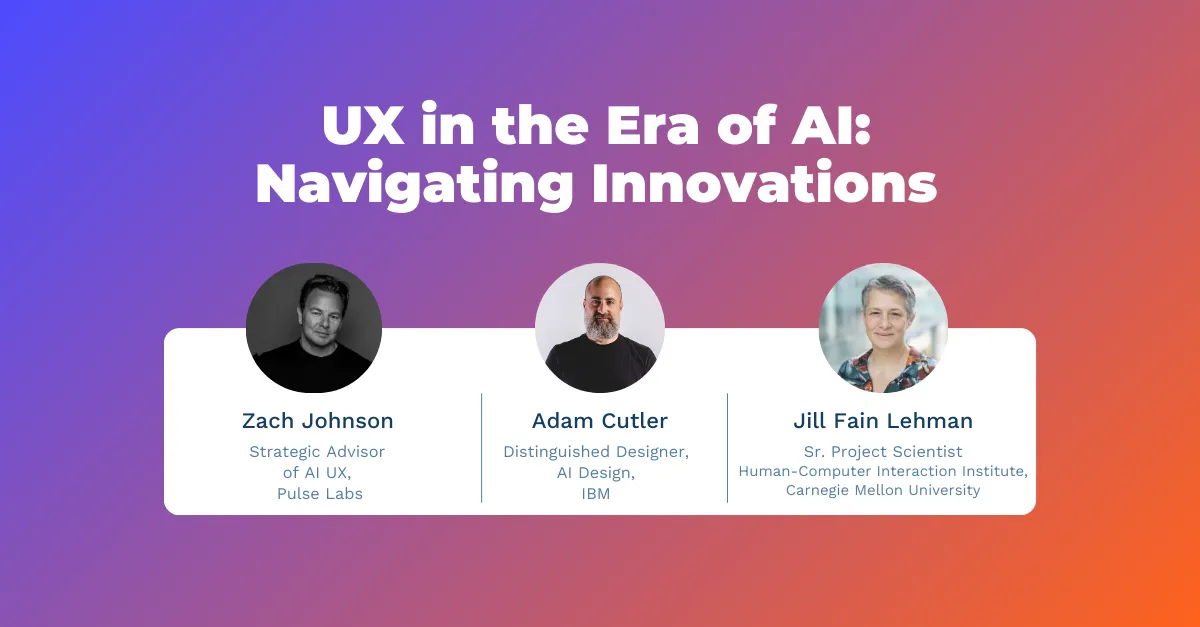

Webinar Recording- UX in the Era of AI: Navigating Innovations

Sign in or subscribe for access to the webinar recording and more free UX content from Pulse Labs. This insightful 45-minute conversation hosted by Pulse Labs, is designed for UX researchers looking to navigate the rapidly evolving landscape of AI-driven applications. During the session, we hosted a dynamic, moderated discussion

Google Cloud Next 2024: AI Innovations to Supercharge UX Research

Based on this week’s keynote address, Google Cloud Next 2024 is slated to be a hotbed of cutting-edge AI solutions, and many of these advancements represent potential for the User Experience research community. We’ve identified a few key takeaways that could inform future practices to gain UX insights