54% of AI users are performing work or school tasks, posing risk of data leaks

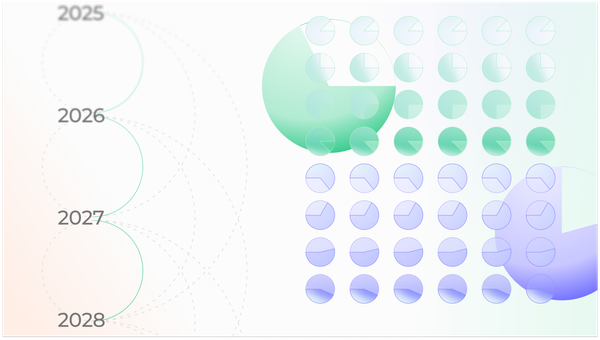

For the first time in Pulse IQ's research, more than half of users surveyed indicated they use AI to increase productivity at work or school. Tools like ChatGPT, Bard, Bing AI, and Claude are quickly becoming go-to productivity tools, serving as a personal assistant, editor-in-chief, or quick source of information. Alarmingly, few companies have strategies in place to address the risks AI poses, so use of this technology is rapidly outpacing organizations' ability to manage them.

The top three reasons people cited for using AI at work or school were:

1. Information and Research

26% use AI to get information quickly, despite occasional hallucinations and incorrect data.

Gen Z

AI has helped teach me information on things like Biology and Chemistry when I felt like my professors were going too quickly. Although AI is not 100% accurate in its information, I still really like its personalized study method.

I ask broad questions and allow them to answer me in different ways, so I can get ideas for the direction I want to go with essays and discussion topics.

Millennials

My workplace desktop has a little software issue which concerns retrieving documents. I have to ask AI to help me solve the issue by telling me what to do.

My work requires a lot of research and I make use of Bing most times for this because it has this feeling different from using normal search engines.

2. Better, Faster Writing

16% find AI a key aid for elevating language proficiency, grammatical accuracy, tone and professionalism. For these users, AI helps them find the right tone for their audience and produce content more quickly.

Millennials

I had a presentation that was due at work. I remember using ChatGPT to arrange and finalize my work so quickly before the deadline. It was a wonderful presentation as well.

AI helped me curate and fix the formatting and grammatical errors in my research article write-up.

AI helps me craft language that is more appropriate to a mass audience. I'm an engineer and often get hung up on technical details. AI helps me convert the technical details into marketing speak.

3. Problem Solving

12% use AI to solve problems that are outside their knowledge base.

Millennials

I needed to create an SEO (search engine optimization) for a newly launched website for my company; I didn't know where to begin so I threw it at ChatGPT and I got the exact solution and direction I have been searching for in the past 2 weeks.

Gen X

Use all the time with marketing posts and ways to update my clients website. Also helps with coding when I need to manually add something.

Implications

For businesses

If you haven't already, add AI use to your employee training ASAP! As more workers learn about and use AI, it's unlikely that all of them will understand the implications of feeding sensitive business information into these tools. Workers could compromise customer data or sensitive business information, especially if they use free, publicly available versions of this software.

In April, Cybersecurity Dive published a report on the risks that ChatGPT use at work poses to employers. Chief among them: sensitive information entered into the chatbot may become part of its training data, which could result in data leakage. Businesses should create data privacy policies specific to AI, educate employees about the risk of feeding sensitive data into these tools, and give this education the same importance as any other security training.

In addition to the risk of leaking sensitive information, AI is making bad actors more savvy and lowering the barrier to entry for hackers and fraudsters. Derner & Batistic's May report, Beyond the Safeguards: Exploring the Security Risks of ChatGPT, shows how AI can be used to hone phishing campaigns ("scammer grammar" may be a thing of the past), generate disinformation, drive spam, impersonate people, write malicious code, disclose personal information, or even be manipulated by fraudulent services.

Although most security research has focused on ChatGPT, businesses should educate employees about the risks of all AI tools, since many of the risks that come with ChatGPT also apply to Bard, Bing AI, and Claude.

For schools

AI's impact cannot be ignored, and now is the time to figure out how to teach students to use these tools safely, in ways that enhance rather than compromise learning. In December 2022, The Atlantic declared "The College Essay Is Dead." Since then, countless news articles have examined the impact of generative AI on college and high school essays. Leaders in K-12 and higher education have expressed concern about AI's impact on students' learning, their data privacy, and the future of education in a world where assignments can be generated with a few prompts.

The US Department of Education has begun proposing creative solutions. In May, it released an in-depth report on the future of AI policy in education, ways AI can be incorporated into curricula, and how it can be used to improve teaching and professional development.

Because the needs of education differ from those of businesses, researchers, and individual adult users, education-specific AI models with built-in safeguards for students are one possible answer. What would such a model look like? Based on the Department of Education's guidance, a student-centered approach to AI would incorporate these six elements:

- Alignment of the AI model to educators' vision for learning

- Data privacy

- Notice and explanation (of how the AI is detecting patterns and making recommendations)

- Algorithmic discrimination protections

- Safe and effective systems (designed for diverse learners and varied educational settings)

- Human alternatives, considerations, and feedback
