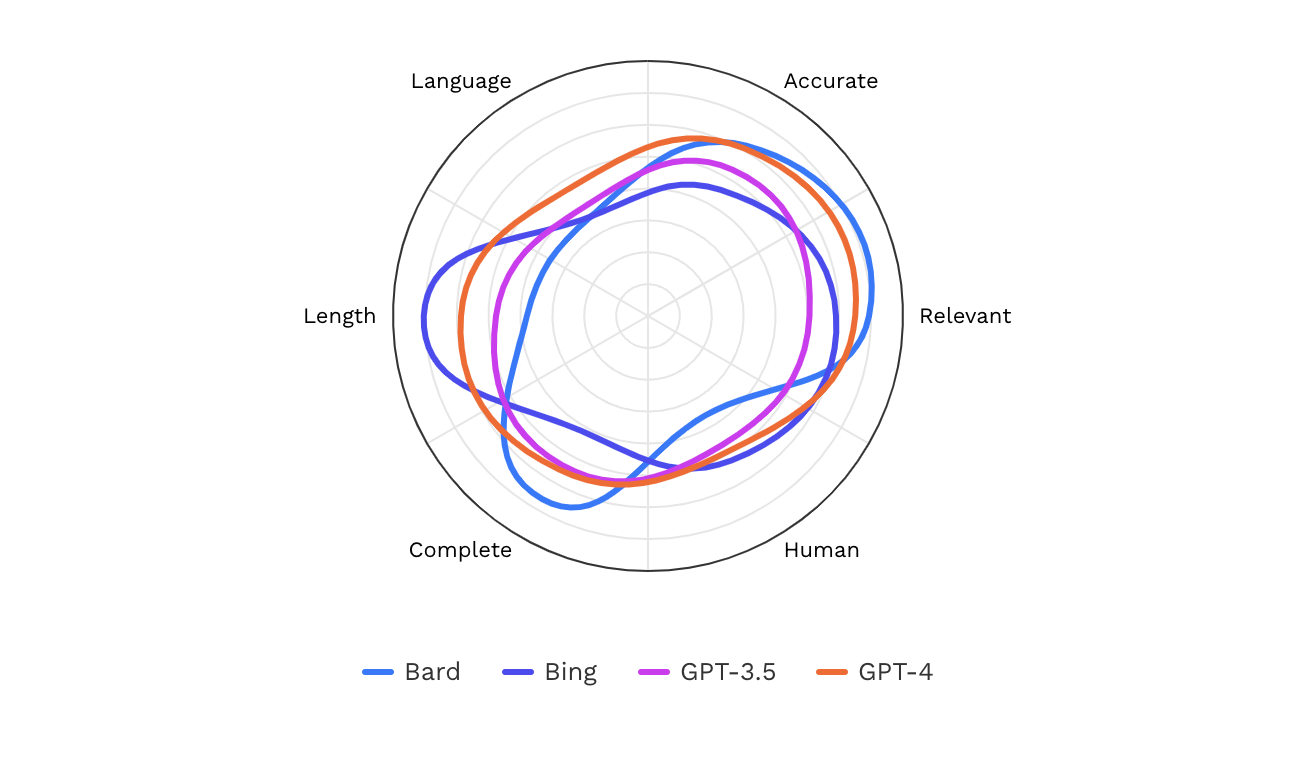

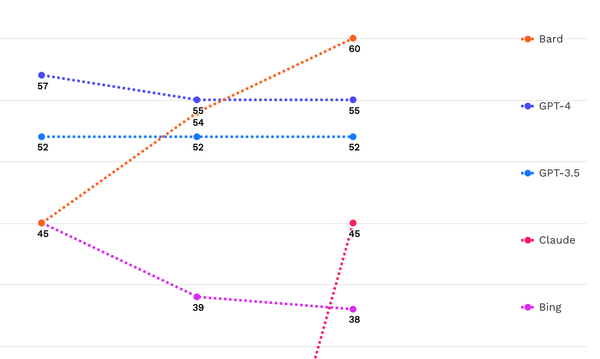

In blinded side-by-side testing, users select their preferred AI response to each query in a series and describe why. The results show how the four platforms compare across hundreds of users and various question types.

- Bard stood out for responses that were viewed as most complete, accurate, and relevant.

- GPT-4 outscored GPT-3.5 in every category, most notably response length. GPT-4's responses were also seen as more accurate and relevant than GPT-3.5's.

- Bing's standout area was providing responses of the right length, and it also edged past GPT-4 on how human-like its responses were.

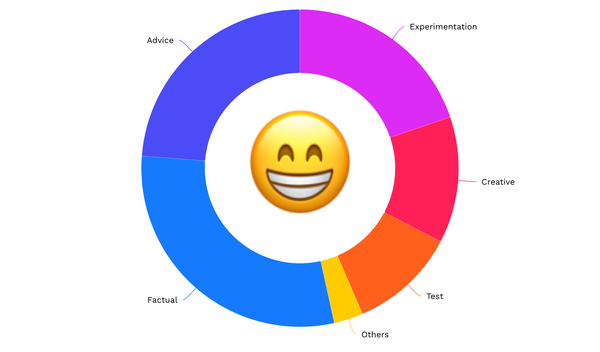
